How AI is Changing the Developer Experience

From Cursor to Claude Code, a story about credits, context, and changing how we code

A couple of weeks ago I accidentally used all my included Cursor credits. They made it pretty easy to do and I had already boosted my included ones. So I thought I would give Claude Code a try.

I must say it feels like we're entering another age of developer experience evolution. I'm spending more time thinking and reviewing than coding these days, and somehow I'm shipping more.

Not abandoning ship

To be clear, I’m still using Cursor for my IDE. I still plan on using Cursor long term. It strikes the right balance of features and UX around the right process of software engineering.

If anyone is listening, the features missing from other IDEs are small and have little to do with AI:

@git lets you pull context from git specifically and directly

@linter errors helps you redress linting/formatting

@web/@docs provides steerability around documentation

These aren’t deal breakers in other platforms and they aren’t present in Claude Code, but they allow targeted steering of requests, which seems to help me guide the Agent more easily.

Switching from Cursor to Claude Code

I won’t go into setup; this isn’t that type of post. You can read about that here.

Throughout this article, I’ve framed this as Claude Code. The proper framing is a terminal-based AI Agent. You can use Cursor Background Agent, OpenAI Codex, or OpenHands, whatever you like. This is about the shift in practice, not the tool. The tools will keep changing.

There’s some qualia I’m having difficulty describing about changing where you primarily interact with the code. It’s like extreme programming with you as the second-seat senior developer. You’re guiding a pretty clever, forgetful developer, but sometimes he surprises you by fixing the “gotchas” you forgot to specify. AND sometimes he springs off in a completely random direction that is shit.

It feels like there are now 3 stages whilst working with this. We’ll start with the first.

Feature Initialization

I find myself spending more time here and at the end. The forgetfulness of the AI agent here is not the problem. It’s more like it’s the first day they’ve seen the codebase, which it is. We’ve taken pains to create documentation of code architecture and practices with pointers to other stores of context.

The CLAUDE.md file acts as both a global guide to development in our monorepo and a table of contents pointing toward other important context like PROJECT_STRUCTURE.md and STYLE_GUIDE.md. Claude’s clever enough to walk the list if/when it needs to.
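Because CLAUDE.md works as a table of contents, a stale pointer quietly costs the agent context. Here is a minimal sketch of a check for that, assuming pointers appear as bare `.md` file names in the text and resolve relative to the repo root. The helper names `referenced_docs` and `missing_context_docs` are hypothetical, not something Claude Code provides.

```python
import re
from pathlib import Path

# Matches Markdown file names such as PROJECT_STRUCTURE.md (assumed convention).
DOC_NAME = re.compile(r"\b[\w./-]+\.md\b")


def referenced_docs(claude_md_text: str) -> list[str]:
    """Return the unique .md names mentioned in the text, in first-seen order."""
    seen: dict[str, None] = {}
    for name in DOC_NAME.findall(claude_md_text):
        seen.setdefault(name, None)
    return list(seen)


def missing_context_docs(repo_root: Path, claude_md_text: str) -> list[str]:
    """List referenced docs that do not exist relative to repo_root."""
    return [
        name
        for name in referenced_docs(claude_md_text)
        if not (repo_root / name).is_file()
    ]
```

Running something like this in CI or a pre-commit hook would be one way to keep the table of contents honest as docs get renamed or moved.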

So, initialization. It is one of the muscles you have to build by working out. In some ways it is the same as working with any LLM or agent: give it a clear set of directions.

The trouble arrives with what level of specification you need to provide as context. Context engineering is becoming a thing now, keep up.

I want to minimize the level of specification I have to provide because it takes time and attention. I want to maximize the probability of an acceptable outcome. So time spent thinking and reviewing here before kicking off the agent pays dividends.

Some suggestions for providing Claude Code the right context:

Make sure you have run all their setup instructions

Use the #memory feature a lot!

Place action tokens for commands you want Claude to use

Getting good at this enables you to run Claude Code remotely

Think about and review the context you provide before starting.

Implementation

This stage is more like watching a clever engineer that only has command access fly through intelligently guessing what you meant. When we get down to it, that’s what we’re doing too, just with different tools.

For the first day or two, make sure you watch how it is working and make yourself approve and deny certain CLI commands. My team lead and I got into a disagreement about what we were each comfortable with, but it’s a pointless argument because you can specify what it’s allowed to do.
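The idea of pre-approving some commands and blocking others can be sketched as a small allow/deny check. This illustrates the concept only; it is not Claude Code’s actual permission mechanism or configuration format. The pattern lists and the `decide` function are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: deny rules win, then allow rules, otherwise ask the human.
ALLOW = ["git status", "git diff*", "npm run test*"]
DENY = ["rm -rf*", "git push*"]


def decide(command: str, allow=ALLOW, deny=DENY) -> str:
    """Return 'deny', 'allow', or 'ask' for a shell command string."""
    if any(fnmatchcase(command, pattern) for pattern in deny):
        return "deny"
    if any(fnmatchcase(command, pattern) for pattern in allow):
        return "allow"
    return "ask"
```

Checking deny rules first means a broad allow pattern can never accidentally unlock something you explicitly blocked, and anything unlisted falls back to a human decision.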

Press escape anytime it’s going down a route you don’t want it to take and make a note of how you could steer it away from that the next time. You can also give it additional instructions mid-process and it will pick those up at the next stage of its todo call stack.

This step is pretty quick, generally faster than you could do the work yourself. That brings us to the review.

Think and Review

The world has changed. I spend the majority of my time here. When used well, you can have several sessions going at once, but you will be shattered at the end of the day. The context switching does take a toll.

For me, there are 2 stages of review now. The per-commit review where I validate whether Claude did what I asked in an acceptable format and then the PR review where I track my overall objective.

Be ruthless in your per-commit reviews and save as many generalizable memories as you can. Save them in the codebase for later. The per-commit review is important more for your own context than for the Agent. You are holding the program in your head.

This is where you internalize how to steer the agent and can externalize the unwritten instructions for future context. Doing this well matters for your overall impact when using AI SWEs.
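Saving review notes into the codebase can be as simple as appending bullets to a memory file. Here is a rough sketch, assuming memories live as `- ` bullets in a Markdown file. The function name `save_memory` and the file layout are hypothetical, and this is separate from any built-in memory feature.

```python
from pathlib import Path


def save_memory(memory_file: Path, note: str) -> bool:
    """Append note as a bullet unless an identical bullet already exists.

    Returns True if the file was changed.
    """
    bullet = f"- {note.strip()}"
    existing = memory_file.read_text(encoding="utf-8") if memory_file.exists() else ""
    if bullet in existing.splitlines():
        return False  # already recorded; keep the file free of duplicates
    # Make sure the new bullet starts on its own line.
    separator = "" if existing == "" or existing.endswith("\n") else "\n"
    with memory_file.open("a", encoding="utf-8") as handle:
        handle.write(f"{separator}{bullet}\n")
    return True
```

For example, after a per-commit review you might call `save_memory(Path("CLAUDE.md"), "Prefer existing hooks over new fetch wrappers")`, where both the path and the note are placeholders.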

A feature on a walk

Here’s a preview of the near future once you have internalized what context the Agent needs and have prepared your codebase.

I live in the UK and we have public footpaths everywhere. To decrease my screentime, I often go for an hour walk in the morning listening to podcasts. Earlier this week I had a different agenda.

I’ve had a UX feature in mind for a while that I struggled to describe well enough to assign to an engineer. We had a jarring loading screen on one of our apps, and the hard transition without transitional ghost elements made the loading feel like it was taking a long time.

The approach I took was to fire up ChatGPT voice mode and discuss the PRD. I have a pretty good memory of the relevant routes and components, so I just told Chat to ask me any questions I wasn’t thinking about. Once the discussion was complete, I asked for a PRD, which I copied into a GH Issue.

Next, tagging Claude Code in the Issue began the PR and it was ready for my review when I got home. I didn’t use the exact design it came up with, but it found all the relevant parts that needed to be updated so it was a process of polishing that remained.

This is the future I'm talking about—not AI replacing developers, but developers working in fundamentally different ways.

Impact of Claude Code on my development cycle

This is completely scientific and backed by real data 😉.

It’s similar to using an intelligent IDE like Cursor or Windsurf. I still need the UX around diffs, terminal proximity, intellisense, and the many more features all the IDEs made possible before AI.

Where it differs is how I work with it.

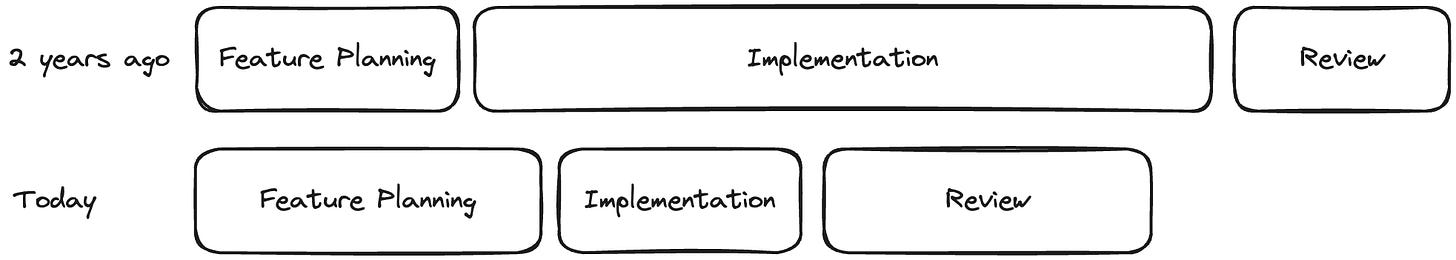

Comparing similar features in the past, I find myself spending more time preparing context for the agent and reviewing its output, but significantly less time in actual implementation. The diagram below illustrates this shift:

The proportions have shifted dramatically. I'm spending roughly:

40% of my time on feature planning and context preparation

20% on implementation (mostly watching and steering)

40% on review and refinement

But note the decrease in total time.

The Bitter Lesson

Richard Sutton's "Bitter Lesson" teaches us that two general methods consistently outperform approaches built on hand-encoded human knowledge as computation scales: search and learning. This principle maps well onto AI-assisted development and onto how my team builds a great product. The challenge is figuring out the right mix to scale it.

What doesn't scale:

Writing perfect prompts for every scenario

Trying to anticipate every edge case

Encoding specific programming patterns

Micromanaging the AI's approach

What does scale:

Search capabilities: Let the AI explore your codebase and find patterns. Add these with context/memory

More context/data: Feed it comprehensive documentation, examples, and code history. Helps engineers as well

Learning from corrections: Build systems that capture and reuse your feedback. Make notes and tailor the “implementing algorithm” for you, your team and your codebase

Retrieval over instruction: Help the AI find answers rather than providing them directly. Include references in your context, everywhere. Also MCP

Large context space: More examples, more documentation, and more code history, all available with clear instructions on how to find them

Time spent building systems that let AI search and learn from your context yields far better returns than crafting the perfect instructions.

This has all been pretext for…

The Real Paradigm Shift

Traditional software teams exist to parallelize development, but they come with a hidden cost: the Principal-Agent Problem. In economics, this describes the inefficiency that arises when one party (the principal) delegates work to another (the agent) who has different incentives and information.

In software development, this manifests everywhere:

Product managers (principals) write specs for developers (agents)

Architects design systems for teams to implement

Senior developers delegate to juniors

Each layer adds interpretation, miscommunication, and divergence from intent

We've tried to solve this with standups, retrospectives, pair programming, and detailed documentation. But these are bandages on a fundamental problem: every human agent has their own context, assumptions, and incentives.

AI Changes the Equation

Here's the thing about AI agents: they have no agenda. They don't get bored, don't have opinions about "boring" work, and don't reinterpret your intent through their own lens. But they are forgetful and need explicit context.

This forced explicitness is a feature, not a bug.

When you prepare context for Claude Code, you're not just instructing an AI—you're crystallizing your actual intent. The same clarity that helps the AI helps your human teammates. Those CLAUDE.md files, PROJECT_STRUCTURE.md docs, and memory entries? They're solving the Principal-Agent Problem by eliminating ambiguity.

The Autonomy Threshold

There's a critical point where your context engineering becomes good enough that the AI can work semi-autonomously. This threshold happens when:

Your documentation captures both explicit rules (how we name things) and implicit patterns (why we structure things this way)

Your feedback loops are tight enough that corrections get captured as memories

The AI has enough examples to interpolate between cases

You trust the system enough to let it run, then review the results

When you cross this threshold, something magical happens: you become a principal who can directly implement your vision without the traditional agent problems.

The New Development Pipeline

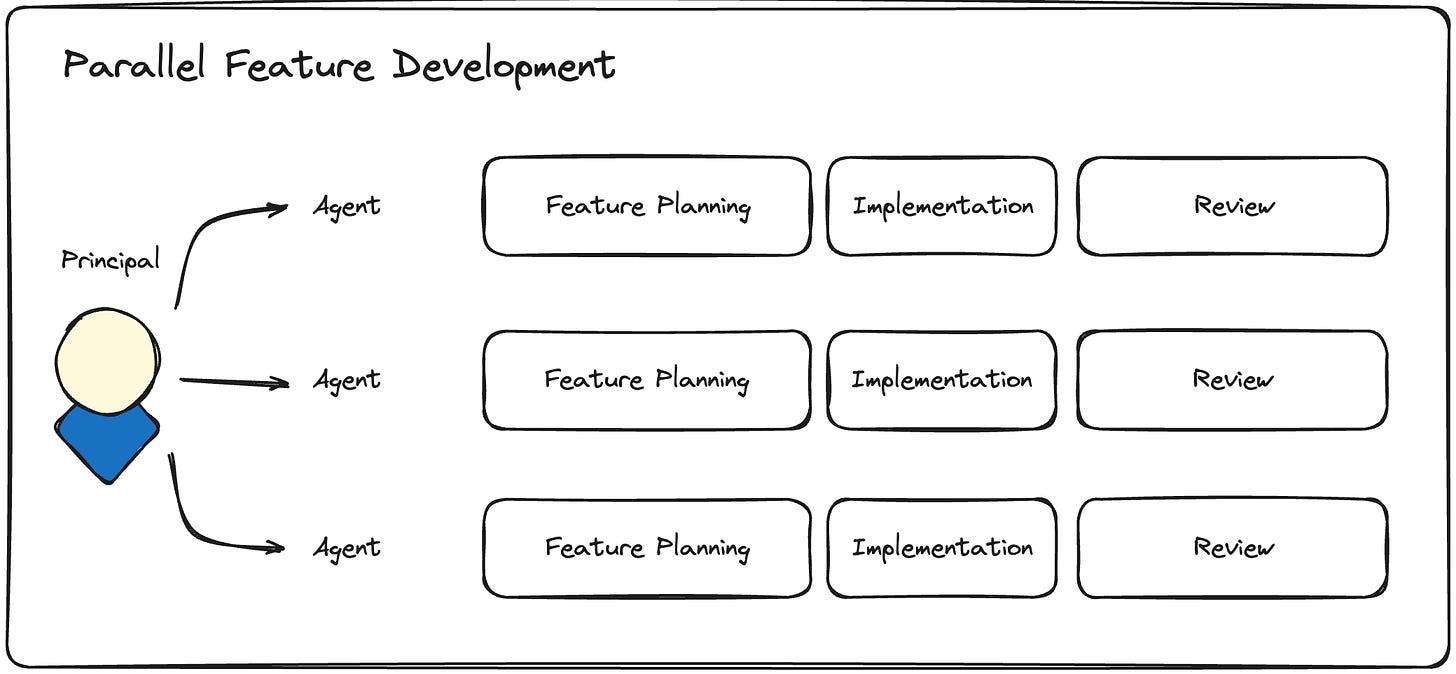

This enables a fundamentally different kind of parallelization. Instead of coordinating multiple human agents (with all their context overhead), you're orchestrating multiple AI sessions as the Principal.

While Claude Code is implementing one feature, I can be:

Reviewing its previous work

Coordinating with teammates about what we should do

Preparing context for the next feature

Running QA on completed work

Making coffee

But here's the key difference: each session starts fresh, with only the context you explicitly provide. No assumptions carried over from the last meeting. No personal interpretation of what you "probably meant." Just pure implementation of your specified intent.

I’ve yet to answer how to get more Principals. How do you increase agency and taste on your team?

Where we're headed

We're witnessing the early stages of a fundamental shift in software development. AI isn't replacing developers (there will be more of them); it's changing what development means.

My advice for developers:

Start experimenting now - The learning curve is real but manageable

Keep your IDE - You'll need it for everything AI can't handle (yet)

Invest in context engineering - It's the highest-leverage skill in this new paradigm

Document differently - Write for both humans and AI

Embrace the discomfort - The tools that feel weird today define tomorrow's workflows. Keep trying new things and please share!

We are laying down the groundwork and writing the playbooks for the new paradigm now. It also means we can influence the direction it goes. As an industry, how do we want to work?

The bottom line

Two weeks ago, I was frustrated about burning through my Cursor credits. Today, I'm grateful for the accident that pushed me to explore Claude Code. (I mean I could have just bought more, but where’s the fun in that?)

We're in an extraordinary moment where the tools of our trade are evolving faster than our mental models and team dynamics. The developers who thrive will be those who adapt their workflows to leverage AI as a true collaborator, not just a smarter autocomplete.

A lot more software will be written, the way we work together will change, and it’s perhaps the most fun I’ve had in engineering.

If you liked this post, please subscribe. My aim is to write about what I see as an Engineering Leader as we go through this paradigm shift with AI.
