Engineering KPIs in the Age of AI

Why measuring engineering is still so hard and the Cursor Effect

Shipping software has always been a juggling act: velocity, stability, and alignment with whatever the business pivoted to this week. In 2023‑2025 that juggling act collided with a new variable. AI…everywhere.

Suddenly our engineers weren’t just pushing code; they were pair‑programming with models, rubber‑ducking with Claude, and ghost‑writing tests in Cursor. The ground shifted, and the yardsticks we’d been using (story points, lines of code, raw PR counts) started to look even more prehistoric. (They were prehistoric even before AI.)

The KPI problem

What gets measured gets managed. Be very, very sure you like what you’re managing.

Traditional engineering KPIs evolved for a world of waterfall projects and weekly/monthly/semi-yearly deploy trains, with Project Managers instead of Product Managers. They were decent proxies for effort, but terrible proxies for impact:

Story Points / Sprint Velocity: Good for capacity planning and for recognizing where slowdowns are happening. Requires estimating (intelligently guessing) the complexity of the work up front, and becomes difficult to use as backlogs start to evaporate.

Lines of Code (LoC): Easy to game, inversely correlated with elegance.

PR Count: Rewards shipping tiny tickets over tackling systemic debt. Doesn’t recognize complexity of the update.

A growing concern

Even before we started using ChatGPT and Claude, it was becoming clear that how engineers approached tickets was changing. Metrics like story points relied on a level of upfront task specification that often didn’t match reality. Too much structure limited creativity; too little left work undefined.

Tools like Copilot, and later Cursor, amplified this shift. Engineers could move faster, iterate earlier, and revise more freely. Our existing measures, story points and ticket velocity, struggled to keep up. They captured activity but missed the nuance: the decisions made, the scope adjusted, the judgment exercised in the course of solving a problem.

Enter the Impact Score

Between Q4 ’24 and Q1 ’25 we gave every product engineer Cursor access and wired an AI‐driven rubric straight into GitHub Actions. Every merged PR now passes through the same structured prompt:

// TL;DR of the Impact Prompt
{
  "impact_score": 1-5, // Very Low…Very High
  "impact_assessment": "…", // Short rationale
  "relevant_files": ["…"] // Most‑affected paths
}

The LLM evaluates scope, complexity, architectural blast radius, and whether new dependencies are introduced. Engineering leadership reviewed the resulting scores to confirm they matched our objective: a reasonable metric for velocity when working with AI.
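Since the score comes back from a model, it seems worth checking the reply before storing it. The sketch below is one way that could look in Python. The three field names come from the prompt summary above; the `ImpactResult` class, the `parse_impact_response` function, and the specific validation rules are assumptions for illustration, not a description of the actual pipeline.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ImpactResult:
    """Hypothetical container for one scored PR (field names from the prompt)."""
    impact_score: int
    impact_assessment: str
    relevant_files: list[str]


def parse_impact_response(raw: str) -> ImpactResult:
    """Parse and validate the model's JSON reply; raise ValueError if malformed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("response must be a JSON object")

    score = data.get("impact_score")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 5:
        raise ValueError(f"impact_score must be an integer from 1 to 5, got {score!r}")

    assessment = data.get("impact_assessment")
    if not isinstance(assessment, str) or not assessment.strip():
        raise ValueError("impact_assessment must be a non-empty string")

    files = data.get("relevant_files")
    if not isinstance(files, list) or not all(isinstance(f, str) for f in files):
        raise ValueError("relevant_files must be a list of strings")

    return ImpactResult(score, assessment.strip(), list(files))
```

A reply that fails validation could be retried or routed to a human rather than silently recorded, which fits the "keep humans in the loop" stance later in this post.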

Why it works:

Complexity‑aware. Ten‑line fixes that de‑risk an outage score higher than cosmetic 200‑line refactors.

Consistent. Same rubric, same scale, across every PR.

Cheap feedback loop. Scoring every PR by hand isn’t something you could keep up with as the number of PRs from AI agents increases.

A previous career made me very wary of KPIs. I know how they can be gamed and abused, and how they can fail to serve actual business goals or results. But alas, I see the need for them as an engineering leader.

Impact is naturally spiky—shaped by the messy realities of engineering—so I treat the metric with caution. Yet it’s still the most useful gauge I’ve found in our AI-driven journey, and I’m sharing it because few others seem to be talking about it.

The Cursor Effect

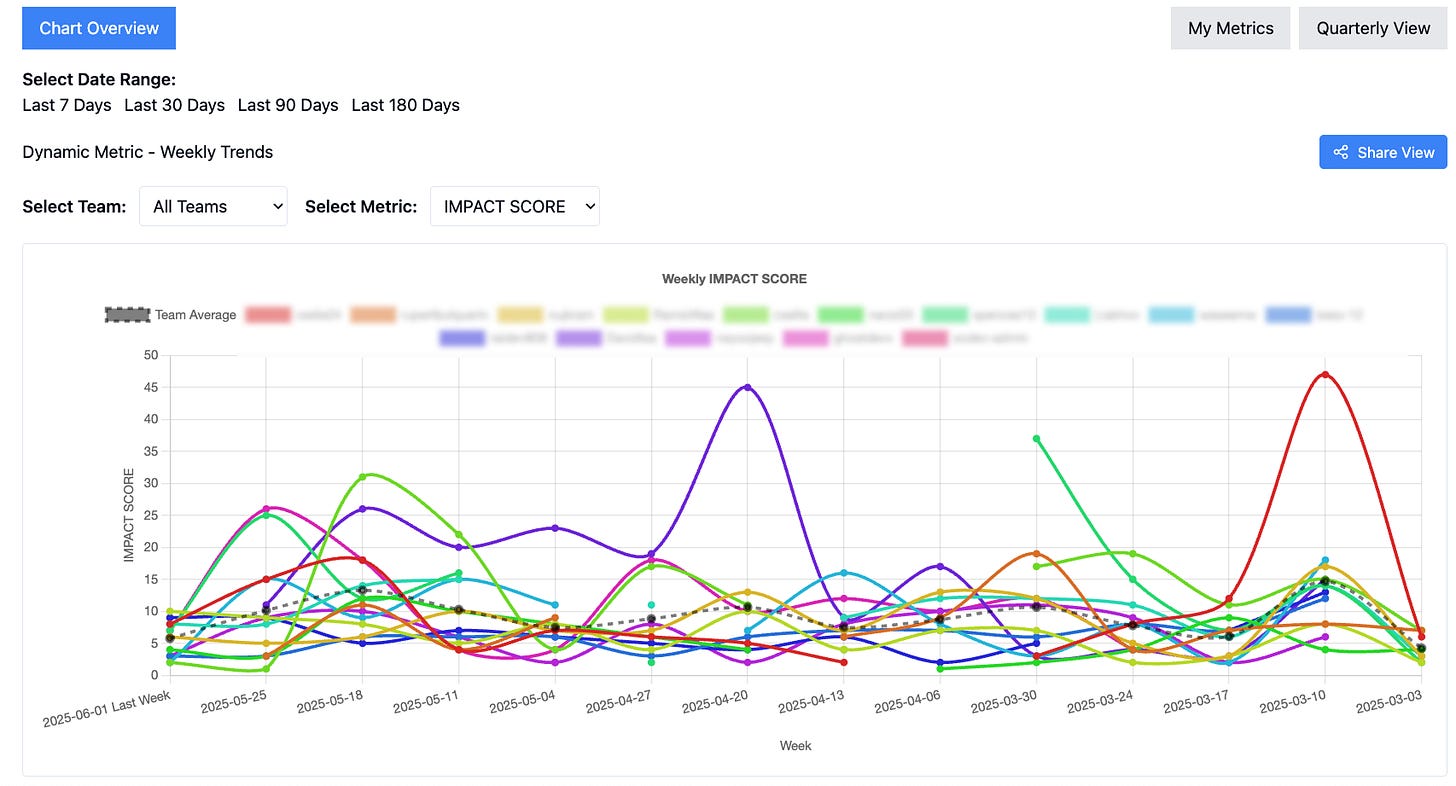

Here’s a quick look at the Impact numbers for our team. I describe it internally as the Cursor Effect.

Q3 '24: 436 PRs, 2.79 avg = 1,217 total impact

Q4 '24: 475 PRs, 2.68 avg = 1,273 total impact

Q1 '25: 712 PRs, 2.80 avg = 1,994 total impact (peak)

Q2 '25: ~650 PRs, ~2.67 avg = ~1,736 total impact (projected)

Totals: all product engineering teams, monorepo.

Four things jump out:

Cursor super‑charged exploration in Q1 ’25: PR count and total impact each rose by roughly half over Q4 ’24 (475 to 712 PRs, 1,273 to 1,994 total impact).

Impact per PR barely moved. The AI kept us honest; extra volume didn’t inflate the average. The quarter-to-quarter differences (2.67 to 2.80) are probably within normal variation.

Q2 contraction is healthy. We spent the quarter paying down sharp edges revealed by Q1’s blitz. Fewer PRs, but still ~1.7k Impact Points.

Total impact increased between 36.4% (Q2 ’25, projected) and 56.7% (Q1 ’25) over the Q4 ’24 baseline. Keep in mind these are fuzzy numbers and we could bikeshed about any part of this. (I find it useful though.)
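For anyone who wants to check the arithmetic, here is a small Python sketch that recomputes the quarter-over-quarter changes from the totals listed above. Using Q4 ’24 as the baseline is an assumption on my part, chosen because it reproduces the 36.4% figure; the function names are illustrative. The inputs are the rounded, published numbers, so results can differ from the quoted percentages by about a tenth of a point.

```python
# Quarterly figures as published above; Q2 '25 values are projections.
QUARTERS = {
    "Q3 '24": {"prs": 436, "total_impact": 1217},
    "Q4 '24": {"prs": 475, "total_impact": 1273},
    "Q1 '25": {"prs": 712, "total_impact": 1994},
    "Q2 '25": {"prs": 650, "total_impact": 1736},
}


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


def change_vs_baseline(metric: str, baseline: str = "Q4 '24") -> dict[str, float]:
    """Percentage change of `metric` in every other quarter, relative to the baseline.

    The default baseline is an assumption, not something the post states outright.
    """
    base = QUARTERS[baseline][metric]
    return {
        quarter: round(pct_change(values[metric], base), 1)
        for quarter, values in QUARTERS.items()
        if quarter != baseline
    }


if __name__ == "__main__":
    for metric in ("prs", "total_impact"):
        print(metric, change_vs_baseline(metric))
```

Swapping the `baseline` argument to `"Q3 '24"` shows how much the headline number depends on which quarter you compare against, which is part of why these figures are best read as fuzzy.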

Early lessons

Velocity doesn’t guarantee value. Doubling the number of PRs only matters if it meaningfully contributes to the product or the business. That’s a subjective call, but broadly, our engineering leadership believes the uptick in PRs added real value. Some of our most impactful contributions came from changes under 20 lines—small updates that unlocked entire workflows.

The Impact Agent gives us consistency by applying the same rubric across all PRs, but we still rely on human judgment to check the outliers. Impact Scores aren’t about grading engineers; they’re there to prompt the right questions. When something stalls, we look at what was being asked and at team dynamics, not at the individual. It helps us understand what happened and how to move forward next time.
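How outliers get picked for human review isn’t something I’ve spelled out, so treat the following as one possible sketch rather than our actual process. It flags PRs whose score sits unusually far from the batch average. The `flag_for_review` function, the z-score approach, the 1.5 threshold, and the PR ids in the usage example are all assumptions for illustration.

```python
from statistics import mean, pstdev


def flag_for_review(scores: dict[str, int], z_threshold: float = 1.5) -> list[str]:
    """Return PR ids whose score is more than z_threshold standard deviations from the mean."""
    if len(scores) < 2:
        return []  # not enough data to say what "unusual" means
    avg = mean(scores.values())
    spread = pstdev(scores.values())
    if spread == 0:
        return []  # every PR scored the same, so nothing stands out
    return sorted(
        pr for pr, score in scores.items()
        if abs(score - avg) / spread > z_threshold
    )


if __name__ == "__main__":
    # Hypothetical batch of scored PRs.
    batch = {"pr-101": 3, "pr-102": 3, "pr-103": 2, "pr-104": 3, "pr-105": 5, "pr-106": 3}
    print(flag_for_review(batch))
```

On a 1 to 5 scale the spread is small, so the threshold needs tuning against real data; the point is only that the check is cheap enough to run on every batch and hands the judgment call back to a person.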

Pitfalls to avoid

As with any metric, it’s easy to create foot guns: ways of using the tool that backfire. We watch for anti-patterns and for scores being used outside their intended context. These are the key ones:

Goodhart’s Law 101: The moment incentives are built around Impact Points, devs will start architecting high‑blast‑radius PRs.

Blind trust in the model: A mis‑parsed diff can skew a score. Keep humans in the loop.

Ignoring business context: A technically elegant refactor is worthless if it can’t be mapped to something important for the product.

Where we’re headed next

The nature of roadblocks is shifting. We're no longer bottlenecked by code implementation itself, but by what surrounds it: product clarity, support engineering, pull request review, and feedback integration. One emerging challenge is cohesion—teams losing track of what’s happening across engineering as velocity increases.

Impact × Business KPIs. My headspace right now is on how to connect these. Current status: I’m still thinking.

RLHF on past merges. Fine‑tune the scoring model with our historical reviews. I tried pulling GitHub review comments. Result: we say LGTM a lot.

Real‑time dashboards. Show product managers the impact trendline next to user engagement. I want to expand this to an internal AI Agent we call Stan giving feedback to devs directly.

Final thoughts

AI hasn’t made engineering simpler; it has compressed the time from requirement to code. That speed can tighten feedback loops, but only if we stay intentional, measure impact together, and iterate our process.

In this landscape, the winners aren’t the teams that ship the most code—they’re the ones that discover, validate, and scale impact fastest. The route keeps shifting as old bottlenecks disappear and new ones surface.

Our constraints are moving away from the keyboard toward thinking and review. These steps matter more, and automation is finally giving us the time to do them well.

Impact Score helps us keep a sense of velocity even as traditional backlogs shrink. It has held up as AI engineers join the mix and offers a template for the next hurdles along the way.

It isn’t perfect, but it sparks the right conversations and still points to our only north star: shipping product that matters.

This is refreshing food for thought!

Chris, could you clarify how you built the impact model? Is it just a prompt to start?

Thank you for sharing.