Can an Artifact-First Approach Solve Conversation Quality Problems?

Using Kimi K2's Blueprint for an Evolutionary Reward System

I've been wrestling with a problem in conversation quality: human interactions are too slow to generate the signal we need for meaningful improvement. It's challenging to optimize what feels like an infinite game when we only have finite data to work with.

Then Kimi K2 dropped their research on artifact-first training, and something clicked.

The Infinite Game Problem

Conversations exist in a nearly infinite space, yet what is appropriate at any given moment is finite and constrained. A customer support agent or customer could literally say anything at any point, and the conversation could branch in countless directions. Did I mention they can be multithreaded as well?

Traditional customer support approaches try to constrain this with SOPs and training, but there's a massive gap between documented procedures and the implicit knowledge that separates great agents from mediocre ones. All of this and more applies to building AI agents for conversations.

Think about humor: it violates our expectations while still conforming to conversation constraints. The joke lives in the split second between the answer you expect and the one that actually gets said. Like comedians workshopping material, the best conversationalists know intuitively what fits within the implicit boundaries of the conversation while finding novel paths through the interaction space.

Customer support operates the same way. When faced with uncommon scenarios, the best agents find solutions that still feel "right" within the context and stay within the bounds of what is allowed. But how do you build agents for that from the ground up? How do you even measure it?

K2's Constraint Solution

Kimi K2's breakthrough wasn't just technical; it was conceptual. Instead of trying to optimize conversation quality directly (a non-verifiable, scalar reward), they shifted to generating artifacts that could be functionally tested.

Their insight: artifacts collapse the infinite conversation space into something finite and measurable.

When K2 generates a PowerPoint presentation, an interactive diagram, or functional code, the quality becomes verifiable. Does the presentation convey the information clearly? Does the code run? Is the output in a valid format?

This is brilliant because it sidesteps the fundamental challenge of translating non-verifiable rewards into verifiable ones.

In our case, the artifact is just a message, but viewing it from this perspective somehow changes things. It puts the non-verifiability itself under scrutiny, and it allows a rubric-graded chat reward in which the rubric can change as well.
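As a minimal sketch of what a rubric-graded chat reward could look like, assume a rubric is a list of weighted, checkable criteria and a message's reward is the weighted fraction of criteria it satisfies. The `Criterion` type and the keyword checks below are hypothetical placeholders (a production system would more plausibly ask an LLM judge each rubric question); the point is that the rubric is plain data, so it can change without touching the reward function.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Criterion:
    """A hypothetical rubric item: a name, a weight, and a pass/fail check."""
    name: str
    weight: float
    check: Callable[[str], bool]


def rubric_reward(message: str, rubric: list[Criterion]) -> float:
    """Return the weighted fraction of rubric criteria the message satisfies, in [0, 1]."""
    total = sum(c.weight for c in rubric)
    if total == 0:
        return 0.0
    earned = sum(c.weight for c in rubric if c.check(message))
    return earned / total


# Placeholder keyword checks; each could instead be an LLM-judged rubric question.
rubric = [
    Criterion("acknowledges_issue", 2.0, lambda m: "understand" in m.lower() or "sorry" in m.lower()),
    Criterion("gives_next_step", 3.0, lambda m: "next step" in m.lower()),
    Criterion("concise", 1.0, lambda m: len(m.split()) <= 60),
]

msg = "I understand the frustration. I will reset your password now and email you the next steps."
print(rubric_reward(msg, rubric))  # 1.0: all three criteria pass

# The rubric is data, so it can evolve: add a criterion this message does not meet.
rubric.append(Criterion("references_ticket", 1.0, lambda m: "ticket" in m.lower()))
print(rubric_reward(msg, rubric))  # 6/7, about 0.857
```

Appending one criterion the message does not meet lowers its reward from 1.0 to 6/7 with no change to `rubric_reward` itself.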

Mapping to Conversation Quality

We've been building something similar at XtendOps: what we internally call our self-learning agent system (externalized Q-values, not actual Q-learning). Conversation patterns are tested against each other and prioritized automatically based on implicit feedback from our chat system.
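As an illustrative sketch only (not the actual XtendOps system), externalized values can be as simple as a per-pattern score stored outside the model, nudged toward implicit feedback, and used to prioritize patterns. The class name, the feedback encoding (1.0 for a good signal, 0.0 for a bad one), the exponential-moving-average update, and the epsilon-greedy selection are all assumptions. Because there is no bootstrapped estimate of future value, this behaves like a multi-armed bandit rather than Q-learning.

```python
import random
from collections import defaultdict


class PatternValueStore:
    """Hypothetical store of externalized values for conversation patterns.

    Values live outside the model (e.g. in a database) and are nudged toward
    observed implicit feedback. There is no bootstrapped future-value term,
    so this is a bandit-style estimate, not Q-learning.
    """

    def __init__(self, learning_rate: float = 0.1, epsilon: float = 0.1, seed: int = 0):
        self.values: dict[str, float] = defaultdict(float)  # unseen patterns start at 0.0
        self.learning_rate = learning_rate
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def update(self, pattern: str, feedback: float) -> None:
        """Exponential moving average toward the feedback signal (1.0 good, 0.0 bad)."""
        old = self.values[pattern]
        self.values[pattern] = old + self.learning_rate * (feedback - old)

    def choose(self, candidates: list[str]) -> str:
        """Epsilon-greedy: usually the highest-valued pattern, occasionally a random one."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(candidates)
        return max(candidates, key=lambda p: self.values[p])


store = PatternValueStore()
for _ in range(20):
    store.update("confirm_then_solve", 1.0)  # e.g. customer replied "thanks, that fixed it"
    store.update("solve_immediately", 0.0)   # e.g. customer had to rephrase the question
print(store.choose(["confirm_then_solve", "solve_immediately"]))
```

The learning rate controls how quickly old evidence is forgotten, and epsilon keeps a small share of traffic on lower-ranked patterns so they keep getting re-tested against each other.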

The parallel to K2's approach is striking. Instead of trying to optimize "conversation quality" directly (impossible to verify at scale, with an enormous search space), we could generate conversation artifacts at runtime, meaning the message plus structured outputs that make quality measurable:

Resolution summaries that capture key decisions and rationale

Knowledge gap analyses that identify what information was missing

Escalation triggers that flag when conversations need human intervention

Outcome predictions that estimate customer satisfaction before the conversation ends

Each artifact provides a concrete evaluation target. Did the summary accurately capture the issue? Did the gap analysis identify the real problem? Did the escalation trigger fire at the right moment?
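One way to make those questions executable, sketched under assumptions: the agent emits its artifacts as JSON, a parser rejects malformed output (the "is it a valid format?" check), and each artifact is then scored against what actually happened. The field names, the 1-to-5 satisfaction scale, the keyword-recall proxy for summary accuracy, and the one-turn tolerance for escalation timing are all hypothetical choices for illustration.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConversationArtifacts:
    """Hypothetical structured outputs emitted alongside the agent's messages."""
    resolution_summary: str
    knowledge_gaps: list[str]
    escalate_at_turn: Optional[int]  # None means "no escalation needed"
    predicted_csat: float            # assumed 1-to-5 satisfaction scale


def parse_artifacts(raw: str) -> ConversationArtifacts:
    """Format check: raises ValueError/KeyError if the output is not the expected JSON."""
    data = json.loads(raw)
    turn = data["escalate_at_turn"]
    artifacts = ConversationArtifacts(
        resolution_summary=str(data["resolution_summary"]),
        knowledge_gaps=[str(g) for g in data["knowledge_gaps"]],
        escalate_at_turn=None if turn is None else int(turn),
        predicted_csat=float(data["predicted_csat"]),
    )
    if not 1.0 <= artifacts.predicted_csat <= 5.0:
        raise ValueError("predicted_csat must be between 1 and 5")
    return artifacts


def evaluate(a: ConversationArtifacts, issue_terms: set[str], confirmed_gaps: set[str],
             human_needed_at: Optional[int], actual_csat: float,
             turn_tolerance: int = 1) -> dict[str, float]:
    """Score each artifact in [0, 1] against what actually happened (crude proxies)."""
    summary = a.resolution_summary.lower()
    # Did the summary capture the issue? Fraction of known issue terms it mentions.
    summary_score = sum(t in summary for t in issue_terms) / len(issue_terms) if issue_terms else 1.0
    # Did the gap analysis find the real problem? Case-insensitive recall against confirmed gaps.
    found = {g.lower() for g in a.knowledge_gaps}
    confirmed = {g.lower() for g in confirmed_gaps}
    gap_score = len(found & confirmed) / len(confirmed) if confirmed else float(not found)
    # Did escalation fire at the right moment (or correctly not fire at all)?
    if a.escalate_at_turn is None or human_needed_at is None:
        escalation_score = float(a.escalate_at_turn is None and human_needed_at is None)
    else:
        escalation_score = float(abs(a.escalate_at_turn - human_needed_at) <= turn_tolerance)
    # How close was the satisfaction prediction? Error normalized over the 1-to-5 range.
    outcome_score = 1.0 - abs(a.predicted_csat - actual_csat) / 4.0
    return {"summary": summary_score, "gap_analysis": gap_score,
            "escalation": escalation_score, "outcome_prediction": outcome_score}


raw = json.dumps({
    "resolution_summary": "Refund issued after a duplicate charge on the annual plan",
    "knowledge_gaps": ["Refund policy for annual plans"],
    "escalate_at_turn": None,
    "predicted_csat": 4.0,
})
print(evaluate(parse_artifacts(raw), {"refund", "duplicate charge"},
               {"refund policy for annual plans"}, None, 5.0))
# {'summary': 1.0, 'gap_analysis': 1.0, 'escalation': 1.0, 'outcome_prediction': 0.75}
```

Each proxy is deliberately simple; the useful property is that every artifact gets its own score, so a weak gap analysis cannot hide behind a good summary.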

The Human Conversation Bottleneck

Here's our core problem: we can't generate human conversations fast enough to explore the conversation space meaningfully and iterate on the AI agent. The search space is enormous because conversations really are infinite games, and it takes time to reach the specific regions of that space where interesting patterns emerge.

Real customer conversations are precious but sparse. We might see thousands of interactions, but only a handful push into new territory. Meanwhile, there are entire regions of conversation space we never explore because the right conditions don't naturally arise.

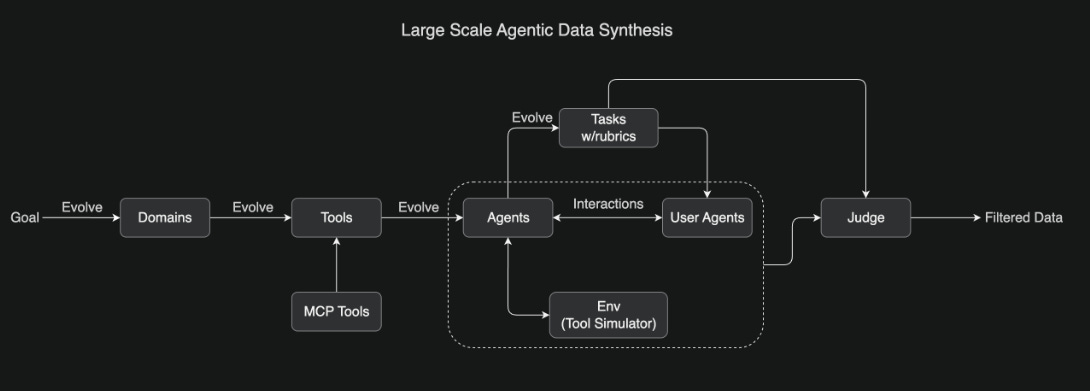

K2's multi-agent self-play training offers a blueprint for solving this. Their approach starts with specific objectives, then generates topic and environment contexts to create diverse scenarios. They integrate both real and simulated tools, build hundreds of agents that compete against each other, and create interactive scenarios. The key is using artifact-based evaluation to filter outputs, enabling continuous improvement through feedback loops where the best strategies naturally emerge. Notice how many times the word "Evolve" appears in the diagram above?
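A toy version of that loop, loosely following the steps described above rather than Moonshot's actual pipeline: objectives are crossed with topics and environment conditions to produce scenarios, several agents attempt each scenario, and only the best rollout is kept, and only if its artifact score clears a threshold. `simulate` is a hypothetical stand-in for an LLM agent talking to a simulated customer and having its artifacts evaluated; here it just draws a seeded random score.

```python
import itertools
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    objective: str
    topic: str
    condition: str  # the environment twist that makes the scenario hard


@dataclass
class Rollout:
    scenario: Scenario
    agent: str
    artifact_score: float


def generate_scenarios(objectives, topics, conditions):
    """Objectives -> topic and environment contexts -> one scenario per combination."""
    return [Scenario(o, t, c) for o, t, c in itertools.product(objectives, topics, conditions)]


def simulate(scenario, agent, rng):
    """Hypothetical stand-in for an agent-vs-simulated-customer rollout plus artifact
    evaluation; here it only draws a seeded random score."""
    return Rollout(scenario, agent, rng.random())


def synthesize(objectives, topics, conditions, agents, threshold=0.7, seed=0):
    """Agents compete on each scenario; keep the winner only if its artifacts pass."""
    rng = random.Random(seed)
    kept = []
    for scenario in generate_scenarios(objectives, topics, conditions):
        best = max((simulate(scenario, a, rng) for a in agents), key=lambda r: r.artifact_score)
        if best.artifact_score >= threshold:
            kept.append(best)
    return kept


kept = synthesize(
    objectives=["resolve billing dispute", "recover failed login"],
    topics=["annual plan", "mobile app"],
    conditions=["angry customer", "off-topic drift", "missing account info"],
    agents=["agent_a", "agent_b", "agent_c"],
)
print(f"{len(kept)} of 12 scenarios produced a filtered training example")
```

Swapping the random score for real artifact evaluation is where all the difficulty lives; the surrounding generate, compete, and filter structure stays the same.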

An Evolutionary Reward System

The key insight is using artifacts to enable evolutionary principles in conversation optimization. Instead of waiting for the right human conversations to emerge naturally, we can:

Generate synthetic conversation scenarios at scale, focusing on problem conversation states: escalations, off-topic drift, unresolved issues, tone mismatches. Each synthetic conversation produces artifacts that can be functionally evaluated.

Create selection pressure by measuring artifact quality across different conversation strategies, and check that these artifact scores align with outcomes in real conversations.

Enable rapid iteration by testing conversation variants against each other without customer impact. We can explore edge cases, test new approaches, and identify optimal strategies in synthetic environments before deploying them.

Support client-specific adaptation, which becomes possible when artifacts provide normalized quality metrics. Instead of subjectively judging "good" conversations differently across accounts, we can measure whether artifacts consistently achieve their intended function.
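Sketched as a simple evolutionary loop, under heavy assumptions: a strategy is a hypothetical bundle of numeric knobs (`empathy`, `questions`), `toy_artifact_score` replaces real synthetic-conversation runs with a made-up formula in which each toy client prefers a different empathy level, and fitness is z-scored per client before averaging so that scores are comparable across accounts. The top strategies survive each generation (the selection pressure) and the rest of the population is refilled with mutated copies of them.

```python
import random
import statistics

Strategy = dict[str, float]  # hypothetical knobs for the conversation agent, each in [0, 1]


def toy_artifact_score(strategy: Strategy, client: str) -> float:
    """Stand-in for running synthetic conversations and scoring their artifacts.
    Each toy client prefers a different empathy level; this formula is made up."""
    target = {"client_a": 0.8, "client_b": 0.3}[client]
    return 1.0 - abs(strategy["empathy"] - target) - 0.5 * abs(strategy["questions"] - 0.5)


def normalized_fitness(population: list[Strategy], clients: list[str]) -> list[float]:
    """Z-score each client's scores so accounts are comparable, then average them."""
    fitness = [0.0] * len(population)
    for client in clients:
        raw = [toy_artifact_score(s, client) for s in population]
        mean, spread = statistics.mean(raw), statistics.pstdev(raw) or 1.0
        for i, score in enumerate(raw):
            fitness[i] += (score - mean) / spread / len(clients)
    return fitness


def mutate(strategy: Strategy, rng: random.Random, scale: float = 0.1) -> Strategy:
    """Gaussian perturbation of every knob, clipped back into [0, 1]."""
    return {k: min(1.0, max(0.0, v + rng.gauss(0, scale))) for k, v in strategy.items()}


def evolve(clients: list[str], generations: int = 30, size: int = 20,
           survivors: int = 5, seed: int = 0) -> Strategy:
    rng = random.Random(seed)
    population = [{"empathy": rng.random(), "questions": rng.random()} for _ in range(size)]
    for _ in range(generations):
        fitness = normalized_fitness(population, clients)
        order = sorted(range(size), key=lambda i: fitness[i], reverse=True)
        parents = [population[i] for i in order[:survivors]]  # selection pressure
        children = [mutate(rng.choice(parents), rng) for _ in range(size - survivors)]
        population = parents + children
    return population[0]  # top-ranked strategy from the final selection


for client in ["client_a", "client_b"]:
    print(client, evolve([client]))
```

Evolving separately per client converges toward each toy client's preference; passing several clients at once yields a compromise strategy, with the z-scoring keeping an account whose scores are uniformly high from dominating the average.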

The Self-Judging Mechanism

K2's general reinforcement learning system uses a self-judging mechanism where the model acts as its own critic. For us, this translates to:

The conversation agent generating both the interaction and the evaluation artifacts. It doesn't just respond to the customer. It also produces resolution summaries, identifies knowledge gaps, and predicts outcomes.

Signals of success (like resolved issues and positive feedback) continuously update the evaluation criteria, which improves how we assess new conversation strategies.
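A minimal sketch of how outcomes could feed back into the self-judged criteria, assuming each conversation records the agent's own pass/fail verdict per criterion plus a later outcome signal such as resolution. A criterion's weight moves toward how much passing it raises the resolution rate. The function name, learning rate, and floor are hypothetical, and this is not a reproduction of K2's self-critique reward.

```python
from collections import defaultdict


def update_criterion_weights(history, weights, lr=0.5, floor=0.05):
    """Re-weight self-judged criteria by how well passing them predicts real outcomes.

    history: list of (judged, resolved) pairs, where `judged` maps criterion name to
    the agent's own pass/fail verdict on its reply, and `resolved` is the outcome
    observed later (e.g. issue resolved, positive feedback).
    """
    # counts[name][passed] = [resolved_count, total_count]
    counts = defaultdict(lambda: {True: [0, 0], False: [0, 0]})
    for judged, resolved in history:
        for name, passed in judged.items():
            bucket = counts[name][bool(passed)]
            bucket[0] += int(resolved)
            bucket[1] += 1

    new_weights = {}
    for name, weight in weights.items():
        (res_pass, n_pass), (res_fail, n_fail) = counts[name][True], counts[name][False]
        if n_pass == 0 or n_fail == 0:
            new_weights[name] = weight  # no contrast yet, so keep the current weight
            continue
        lift = res_pass / n_pass - res_fail / n_fail  # resolution-rate gain from passing
        new_weights[name] = (1 - lr) * weight + lr * max(floor, lift)
    return new_weights


history = [
    ({"empathetic": True, "cites_policy": True}, True),
    ({"empathetic": True, "cites_policy": False}, False),
    ({"empathetic": False, "cites_policy": True}, True),
    ({"empathetic": False, "cites_policy": False}, False),
]
print(update_criterion_weights(history, {"empathetic": 1.0, "cites_policy": 1.0}))
```

Here `cites_policy` perfectly separates resolved from unresolved conversations, so it keeps full weight, while `empathetic` carries no signal in this tiny history and drifts toward the floor. In practice far more data would be needed before trusting either direction.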

Implementation Reality

This isn't theoretical for us. We're already generating summaries and knowledge gap analyses in our current system. The evolution is extending this artifact generation to cover more aspects of conversation quality and using synthetic generation to accelerate the discovery of effective patterns.

The infinite game of conversation becomes manageable when you focus on the finite artifacts that emerge from it. K2 showed this works for general intelligence tasks. The question now is whether it scales to the specific, nuanced world of conversation quality optimization.

Early indicators suggest it will. The conversation space might be infinite, but the artifacts that matter are surprisingly finite and measurable.

What conversation quality challenges are you facing that might benefit from an artifact-first approach? The methodology feels applicable beyond customer support to any domain where conversation outcomes matter more than conversation content.